Impedance Matching

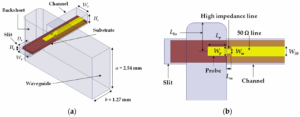

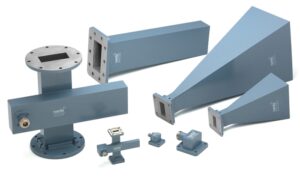

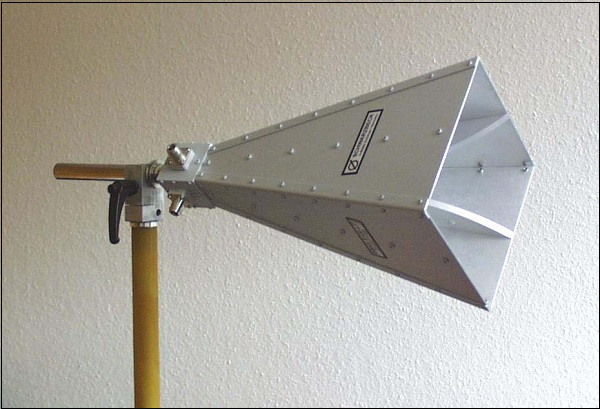

In horn antennas, flaring is important because it improves impedance matching and, with it, overall performance. Without a proper match, the abrupt transition from waveguide to free space reflects a large share of the power back toward the source, causing heavy losses. For instance, with a plain rectangular waveguide and a severe mismatch, as much as 50% of the signal power may be reflected, reducing system efficiency. Flaring makes the impedance change gradually, which reduces these reflections and allows more power to be radiated or received by the antenna.

In practice, for example in satellite communication, this becomes indispensable. Consider a horn antenna feeding a satellite dish with 100 watts of signal power. If an impedance mismatch reflects 30% of that power, only 70 watts are useful and the remaining 30 watts are wasted. With a properly flared horn, the reflection loss can be brought down to 2-5%, so the useful power rises to between 95 and 98 watts.
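The percentages above can be related to the quantities usually quoted for a mismatch. This is a minimal sketch, assuming the reflected fraction is the power reflection coefficient |Γ|²; the function name `mismatch_summary` is hypothetical. It derives the delivered power, |Γ|, VSWR and return loss for the 100-watt example.

```python
import math


def mismatch_summary(incident_w: float, reflected_fraction: float) -> dict:
    """Describe a mismatch from the fraction of incident power that is reflected.

    reflected_fraction is |Gamma|**2 and must satisfy 0 < reflected_fraction < 1.
    """
    gamma = math.sqrt(reflected_fraction)  # magnitude of the reflection coefficient
    return {
        "delivered_w": incident_w * (1.0 - reflected_fraction),
        "gamma": gamma,
        "vswr": (1.0 + gamma) / (1.0 - gamma),
        "return_loss_db": -10.0 * math.log10(reflected_fraction),
    }


# 100 W incident, as in the satellite example
for fraction in (0.30, 0.05, 0.02):
    s = mismatch_summary(100.0, fraction)
    print(
        f"{fraction:.0%} reflected: {s['delivered_w']:.0f} W delivered, "
        f"|Gamma| = {s['gamma']:.3f}, VSWR = {s['vswr']:.2f}, "
        f"return loss = {s['return_loss_db']:.1f} dB"
    )
```

For the 100-watt case, 30% reflection corresponds to a VSWR of about 3.4 and a return loss of about 5.2 dB, while 2% reflection corresponds to a VSWR of about 1.33 and a return loss of about 17 dB.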

The same applies to 2.4 GHz and 5 GHz Wi-Fi systems, where an impedance mismatch can weaken the signal and create dead spots in areas that should be covered. A properly flared horn antenna minimizes signal loss, supporting adequate wireless network range and reliability.

In practice, this is achieved by choosing the flare angle and length according to the operating frequency and environment. In high-gain horn antennas for radar systems operating between 1 GHz and 40 GHz, the flare has to be calculated precisely. Such a horn might use a larger flare angle of about 10 to 15 degrees to maximize gain while still ensuring a smooth impedance transition, whereas in lower-frequency applications smaller flare angles may suffice.
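The link between flare angle and horn length is simple geometry. The sketch below assumes a straight (linear) flare and treats the quoted flare angle as the half-angle each wall makes with the horn axis; the text does not say whether it means the half or the full angle. The WR-90 broad-wall width of 22.86 mm and the 100 mm aperture are illustrative choices, and `horn_axial_length` is a hypothetical helper.

```python
import math


def horn_axial_length(waveguide_width_m: float, aperture_width_m: float,
                      half_angle_deg: float) -> float:
    """Axial length of a straight flare between two widths (hypothetical helper).

    Assumes each wall makes half_angle_deg with the horn axis, so the width
    grows by 2 * tan(half_angle) per metre of length.
    """
    if aperture_width_m <= waveguide_width_m:
        raise ValueError("aperture must be wider than the waveguide")
    growth = aperture_width_m - waveguide_width_m
    return growth / (2.0 * math.tan(math.radians(half_angle_deg)))


# Illustrative values: WR-90 broad wall (22.86 mm) flared out to a 100 mm aperture
for angle in (10.0, 15.0):
    length = horn_axial_length(0.02286, 0.100, angle)
    print(f"half-angle {angle:.0f} deg -> axial length {length * 1000:.0f} mm")
```

Under these assumptions, a 15-degree half-angle gives a horn about 144 mm long and a 10-degree half-angle about 219 mm; the smaller angle trades a longer horn for a more gradual transition.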

Flaring also plays a large role in power transmission through impedance matching. Consider a horn antenna operating at 10 GHz: without proper flaring, the mismatch between the waveguide impedance and the 377-ohm impedance of free space could reflect up to 40% of the transmitted power back toward its source. Such reflection causes inefficiency, especially in high-power satellite communication, where even a 10% reflection wastes 10 watts of a 100-watt signal and greatly reduces system effectiveness.
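One way to see that the waveguide and free space present different impedances is to compute the wave impedance of the dominant TE10 mode, Z = η0 / sqrt(1 - (fc/f)²). This sketch assumes an air-filled WR-90 waveguide (broad wall 22.86 mm) at 10 GHz; `te10_wave_impedance` is a hypothetical helper. The impedance step is only part of the picture, since the actual reflection at a horn aperture also depends on the aperture geometry.

```python
import math

C0 = 299_792_458.0   # speed of light in vacuum, m/s
ETA0 = 376.730313    # impedance of free space, ohms


def te10_wave_impedance(freq_hz: float, broad_wall_m: float) -> float:
    """Wave impedance of the TE10 mode in an air-filled rectangular waveguide."""
    cutoff_hz = C0 / (2.0 * broad_wall_m)  # TE10 cutoff frequency
    if freq_hz <= cutoff_hz:
        raise ValueError("frequency is below TE10 cutoff; the mode does not propagate")
    return ETA0 / math.sqrt(1.0 - (cutoff_hz / freq_hz) ** 2)


z = te10_wave_impedance(10e9, 0.02286)  # assumed WR-90 broad wall, 22.86 mm
print(f"TE10 wave impedance at 10 GHz: {z:.0f} ohms vs {ETA0:.0f} ohms in free space")
```

For WR-90 at 10 GHz this gives roughly 500 ohms, noticeably above the 377 ohms of free space.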

In larger-scale systems such as radar, power levels are even higher, sometimes reaching the megawatt level for short bursts, and a poor impedance match may reflect as much as 20% of the signal back into the system. At 1 MW of transmitted power, that equates to 200 kW of wasted power. By contrast, a well-flared horn antenna that transitions smoothly from the waveguide impedance to the 377-ohm free-space impedance can reduce reflection losses to below 1% (under 10 kW at 1 MW), so nearly all of the power goes into effective transmission. This degree of impedance matching is particularly important for radar systems, whose performance depends on precision and range.

In commercial communication systems such as cellular base stations, reducing impedance mismatch through horn antenna flaring also reduces the need for additional amplification. A 5% power loss due to mismatch may seem insignificant, but accumulated over time and across many units in a large network, it raises operational costs. For instance, in a base station transmitting 50 watts, a 5% reflection wastes 2.5 watts per antenna. Across 100 stations, the wasted power reaches 250 watts, increasing electricity costs and decreasing system performance.
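The fleet arithmetic can be extended to annual energy. This sketch assumes continuous transmission at full power for 8,760 hours a year, which real base stations do not sustain, and counts only reflected RF power; wall-plug losses would be larger because amplifiers are not 100% efficient. `fleet_mismatch_waste` is a hypothetical helper.

```python
def fleet_mismatch_waste(tx_power_w: float, reflected_fraction: float,
                         n_units: int, hours: float = 8760.0) -> tuple:
    """Reflected (non-radiated) power and energy across identical transmitters.

    hours defaults to one year of continuous full-power operation, which is an
    assumption; real traffic-dependent duty cycles would lower the total.
    """
    per_unit_w = tx_power_w * reflected_fraction
    fleet_w = per_unit_w * n_units
    fleet_kwh = fleet_w * hours / 1000.0
    return per_unit_w, fleet_w, fleet_kwh


per_unit, fleet, energy = fleet_mismatch_waste(50.0, 0.05, 100)
print(f"{per_unit:.1f} W per antenna, {fleet:.0f} W across the fleet, "
      f"{energy:,.0f} kWh per year")
```

Under these assumptions, the 250 watts of reflected power add up to about 2,190 kWh per year.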

In space communication, where power budgets are tight, the improved impedance matching from flaring matters even more. Spacecraft operate under strict power constraints; many have less than 100 watts available for all systems combined. A horn antenna that loses just 2% of its power to impedance mismatch might make the difference between a signal that is received successfully and one that is too weak to detect, particularly over long distances. For example, NASA's Mars rovers operate within a total power envelope of about 100 to 150 watts, where a 2% loss amounts to 2 or 3 watts, enough to affect long-range communication with Earth.

Flaring also helps maintain a consistent impedance match across multiple frequencies. Wi-Fi antennas, for instance, are designed to operate at 2.4 GHz or 5 GHz. Even a mismatch that costs only 3% of the power can contribute to signal degradation at the edges of the coverage area. If a Wi-Fi access point transmits 1 watt (30 dBm) and 3% is reflected because of poor impedance matching, the radiated power drops by 0.03 watts.
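Expressing the loss in decibels puts it in the same units as a link budget. This minimal sketch (the helper names are hypothetical) converts the 1-watt example to dBm and computes the mismatch loss, -10·log10(1 - reflected fraction).

```python
import math


def watts_to_dbm(power_w: float) -> float:
    """Convert watts to dBm (decibels relative to 1 mW)."""
    return 10.0 * math.log10(power_w * 1000.0)


def mismatch_loss_db(reflected_fraction: float) -> float:
    """Drop in delivered power, in dB, when reflected_fraction of it is reflected."""
    return -10.0 * math.log10(1.0 - reflected_fraction)


tx_w = 1.0                        # 1 W access point, as in the example
radiated_w = tx_w * (1.0 - 0.03)  # 3% reflected
print(f"{watts_to_dbm(tx_w):.2f} dBm in, {watts_to_dbm(radiated_w):.2f} dBm radiated, "
      f"mismatch loss {mismatch_loss_db(0.03):.2f} dB")
```

A 3% reflection costs about 0.13 dB (roughly 29.87 dBm radiated instead of 30 dBm), so it matters most where the link margin is already thin.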

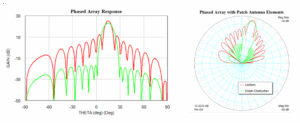

Beam Control

Flaring also gives control over a horn antenna's radiation pattern, enabling more focused beams with smaller side lobes. For horn antennas in radar systems operating around 10 GHz, beam control is critical. For instance, with a 20-degree flare angle, the side lobes can be as low as -20 dB relative to the main lobe. With improper flaring, the side lobes remain at roughly -10 dB, admitting more noise and interference and degrading signal quality. In radar applications where precision is crucial, such as air traffic control, this improvement in beam control supports more accurate target tracking.
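Side-lobe levels in dB translate into power ratios as 10^(dB/10). This short sketch (the helper name is hypothetical) shows what the two side-lobe levels mean relative to the main-lobe peak.

```python
def db_to_power_ratio(level_db: float) -> float:
    """Convert a power level in dB (relative to a reference) to a linear ratio."""
    return 10.0 ** (level_db / 10.0)


# Side-lobe levels from the radar example, relative to the main-lobe peak
for sll_db in (-20.0, -10.0):
    print(f"side lobe at {sll_db:.0f} dB -> "
          f"{db_to_power_ratio(sll_db):.0%} of main-lobe peak power")
```

A -20 dB side lobe carries 1% of the main-lobe peak power, while a -10 dB side lobe carries 10%, ten times as much.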

Horn antennas are often used in satellite communications to keep the beam narrow and limit beam spread. For example, a horn antenna operating at 12 GHz with a 15-degree flare can achieve a beamwidth of approximately 5 degrees. Without proper flaring, the beamwidth may grow to 10 degrees or more. The wider beam concentrates less signal in the intended direction and lowers the signal-to-noise ratio (SNR). An SNR reduction from 30 dB to 20 dB caused by a wider beam can significantly degrade data transmission quality, especially in geostationary satellite communication, which requires precise beam control to avoid interfering with neighboring satellites.
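A rough way to relate beamwidth to directivity is the Kraus approximation D ≈ 41,253 / (θE·θH), with both half-power beamwidths in degrees. This sketch assumes the beam widens equally in both planes and ignores side lobes, so it overestimates the directivity of a real horn; the comparison between the two cases is what matters. `approx_directivity_dbi` is a hypothetical helper.

```python
import math

SQ_DEG_IN_SPHERE = 41_253.0  # 4*pi steradians expressed in square degrees


def approx_directivity_dbi(theta_e_deg: float, theta_h_deg: float) -> float:
    """Kraus-style estimate D ~ 41253 / (theta_E * theta_H), returned in dBi."""
    return 10.0 * math.log10(SQ_DEG_IN_SPHERE / (theta_e_deg * theta_h_deg))


narrow = approx_directivity_dbi(5.0, 5.0)   # well-flared horn, 5-degree beam
wide = approx_directivity_dbi(10.0, 10.0)   # poorly flared horn, 10-degree beam
print(f"5 deg beam: {narrow:.1f} dBi, 10 deg beam: {wide:.1f} dBi, "
      f"difference {narrow - wide:.1f} dB")
```

By this estimate, doubling the beamwidth in both planes from 5 to 10 degrees costs about 6 dB of directivity; any SNR drop beyond that would have to come from other effects, such as added interference.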

Beam control also matters in Wi-Fi systems operating at 2.4 GHz or 5 GHz. When horn antennas have improper flare angles, their beams become broad and weakly directional, so coverage areas overlap and adjacent access points interfere with each other. For example, an antenna with a 10-degree flare could produce a focused 45-degree beamwidth, whereas a poorly designed antenna with no flare might have a beamwidth of more than 60 degrees. The wider beam increases interference and can cut overall network throughput in congested areas by up to 20%, which may call for further investment in more sophisticated interference mitigation technologies, such as beamforming, just to compensate.

In scientific applications such as radio astronomy, beam control is critical for detecting very weak signals from distant sources. A well-designed flared horn antenna operating at 1 GHz with a flare angle of about 30 degrees could produce a beamwidth as narrow as about 2 degrees, allowing very precise targeting of a celestial body. Without the flare, the beamwidth could be 5 degrees or more, diluting the signal and possibly missing important astronomical data. With good beam control, researchers can improve the accuracy of their observations by a factor of 2 to 3, which translates into a better signal-to-noise ratio and the ability to detect fainter sources in deep space.

In military applications, especially electronic warfare systems, highly accurate beam control is required so that specific enemy signals can be targeted without the system itself being detected. A horn antenna for such a system can be designed to operate at 8 GHz with a 20-degree flare angle, creating a narrow beamwidth of about 3 degrees for more focused jamming of enemy communications. A poorly flared antenna produces a 6-degree beam instead, which spreads the jamming signal over a larger area and reduces its effectiveness. This loss of focus can cut jamming effectiveness by as much as 50%, compromising critical missions where precision is essential.

Gain Enhancement

Flaring improves the gain of horn antennas by providing a larger effective aperture. For instance, a horn antenna operating at 10 GHz with a flare that gives a 1-meter aperture realizes a gain of about 24 dBi. Without the flare, the aperture might shrink to 0.5 meters, resulting in a lower gain of about 18 dBi. This 6 dB difference means the flared antenna nominally delivers four times as much power density in its main beam, or collects four times as much power on receive, as the unflared version. The reason is that gain is proportional to aperture area: halving the aperture width and height quarters the area, and 10·log10(4) ≈ 6 dB. This becomes critical in applications such as long-distance communications, where higher gain translates directly into longer transmission ranges and stronger signals.
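The 6 dB figure follows from gain scaling with aperture area at a fixed wavelength and aperture efficiency. This sketch assumes both aperture dimensions scale together and that efficiency stays the same; `gain_change_db` is a hypothetical helper.

```python
import math


def gain_change_db(aperture_scale: float) -> float:
    """Gain change when both aperture dimensions are scaled by aperture_scale.

    Assumes fixed wavelength and aperture efficiency, so gain is proportional
    to aperture area, i.e. to aperture_scale squared.
    """
    return 10.0 * math.log10(aperture_scale ** 2)


print(f"1 m vs 0.5 m aperture: {gain_change_db(1.0 / 0.5):+.2f} dB")
print(f"1.5 m vs 0.75 m aperture: {gain_change_db(1.5 / 0.75):+.2f} dB")
```

Doubling the aperture dimensions gives 10·log10(4) ≈ 6.02 dB, regardless of the absolute size.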

In satellite communications, where tight beam shaping and high gain are necessary, a well-designed horn antenna with a 1.5-meter flared aperture at 12 GHz can realize a gain as high as 30 dBi. A smaller, unflared horn might have only a 0.75-meter aperture and would thus offer only 24 dBi of gain. For the same transmit power, the flared antenna allows double the transmission distance, or alternatively it needs only a quarter of the power for the same distance. This 6 dB difference has important operational impacts. For example, a satellite broadcasting 100 watts through a flared horn with 30 dBi gain delivers four times the power density at the center of its beam as a similar horn rated at 24 dBi. In this way, communications can be extended while reducing power consumption and operating expense.
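The distance and power trade-offs follow from the Friis transmission equation, in which received power is proportional to Pt·Gt·Gr/R². This sketch assumes free-space propagation with only the transmitting antenna's gain changing; `friis_tradeoffs` is a hypothetical helper that returns the range multiplier at fixed power and the power multiplier at fixed range.

```python
def friis_tradeoffs(delta_gain_db: float) -> tuple:
    """Free-space trade-offs for a change in transmit antenna gain.

    From Friis, received power ~ Pt * Gt / R**2 (other terms fixed), so:
      range multiplier at fixed Pt = 10**(delta/20)
      Pt multiplier at fixed range  = 10**(-delta/10)
    """
    range_mult = 10.0 ** (delta_gain_db / 20.0)
    power_mult = 10.0 ** (-delta_gain_db / 10.0)
    return range_mult, power_mult


r, p = friis_tradeoffs(30.0 - 24.0)  # 30 dBi flared vs 24 dBi unflared horn
print(f"range x{r:.3f} at the same power, or power x{p:.3f} at the same range")
```

For a 6 dB gain increase, the range roughly doubles (factor 1.995) at fixed power, or the transmit power can drop to about a quarter (0.251) at fixed range.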

In radar applications, too, gain makes the difference in detecting distant targets, so flare design becomes a critical issue. A horn with a 2-meter flared aperture operating at 5 GHz can reach a gain of about 35 dBi, enough to detect objects at a range of 300 kilometers. A smaller, unflared horn operating at the same frequency with a 1-meter aperture may yield a gain of only about 29 dBi, reducing the detection range to about 200 kilometers. This 100-kilometer difference can be vital in military and air traffic control operations, where objects must be detected at a distance. Higher gain also improves the signal-to-noise ratio, making the radar system more reliable in cluttered environments.
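In the radar range equation, maximum detection range scales as the fourth root of Gt·Gr. This sketch assumes everything else (transmit power, target cross-section, receiver sensitivity) stays fixed; `radar_range_after_gain_change` is a hypothetical helper. It shows that a drop to about 200 kilometers matches a 6 dB loss on one side of the link, whereas a monostatic radar that uses the same horn to transmit and receive would see the loss twice.

```python
def radar_range_after_gain_change(range_km: float, delta_gain_db: float,
                                  same_antenna_tx_rx: bool) -> float:
    """Scale a radar's detection range for a change in antenna gain.

    The radar range equation gives R_max ~ (Gt * Gr) ** (1/4) with everything
    else fixed. If one horn both transmits and receives, the change counts twice.
    """
    total_db = delta_gain_db * (2 if same_antenna_tx_rx else 1)
    return range_km * 10.0 ** (total_db / 40.0)


for monostatic in (False, True):
    r = radar_range_after_gain_change(300.0, 29.0 - 35.0, monostatic)
    label = "transmit and receive" if monostatic else "one side only"
    print(f"6 dB less gain ({label}): about {r:.0f} km")
```

Starting from 300 km, a 6 dB loss on one side gives about 212 km, and on both transmit and receive about 150 km.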

In Wi-Fi systems, the higher gain of a flared horn antenna focuses the signal efficiently, reducing interference and increasing range. Adding a flare to raise a horn's gain from the 10 dBi of a standard access point antenna to 18 dBi extends range substantially; in free space, 8 dB of extra gain stretches a 50-meter range to roughly 125 meters. The greater range can bring significant savings by reducing the number of access points needed to cover an area. In large office buildings, for example, high-gain flared horns could cut the number of access points from ten to four, reducing installation and maintenance costs by more than half.
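The range gained from extra antenna gain depends on how quickly path loss grows with distance. If path loss scales as distance to the power n, range scales as 10^(ΔG/(10·n)); n = 2 is free space, while n = 3 is used here only as an assumed, illustrative indoor value. `range_with_extra_gain` is a hypothetical helper.

```python
def range_with_extra_gain(range_m: float, delta_gain_db: float,
                          path_loss_exponent: float = 2.0) -> float:
    """Range after adding delta_gain_db of antenna gain, other terms fixed.

    Assumes path loss grows as distance ** path_loss_exponent
    (2 = free space; larger values are rough stand-ins for cluttered indoor paths).
    """
    return range_m * 10.0 ** (delta_gain_db / (10.0 * path_loss_exponent))


for n in (2.0, 3.0):  # n = 3 is an illustrative indoor assumption
    print(f"n = {n:.0f}: {range_with_extra_gain(50.0, 18.0 - 10.0, n):.0f} m")
```

For 8 dB of extra gain from a 50-meter baseline, this gives about 126 m in free space and about 92 m with n = 3; quadrupling the range in free space would take about 12 dB.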

For deep space communications, where very high gain is required to transmit signals over distances of billions of kilometers, a flared horn antenna with a large 3-meter aperture operating at 8 GHz would have a gain of about 40 dBi. By comparison, an unflared horn at the same frequency with a 1.5-meter aperture and 34 dBi gain would be able to communicate over a range nearly 50% shorter. For example, the high-gain antennas of NASA's Voyager spacecraft, which communicate over distances of more than 20 billion kilometers, must have very high gain (on the order of 50 dBi) to send and receive extremely weak signals across the vastness of space.

Reduction of Phase Errors

Flaring can also be used to great advantage to minimize phase errors across the aperture of a horn antenna, which appreciably improves performance in systems with stringent requirements on signal transmission accuracy. For example, a horn antenna intended for satellite communications at 12 GHz might exhibit up to 30 degrees of phase error across its aperture due to incomplete phase alignment. The result is wavefront distortion and reduced signal quality, especially over long-distance links. With an adequately computed flare shape and size, these phase errors can be reduced below 5 degrees, yielding a much cleaner wavefront and more accurate signal transmission.
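A common geometric model for aperture phase error treats the horn as fed by a spherical wave from its apex: the path to the aperture edge is longer than the path to the center by sqrt(R² + (A/2)²) - R, where R is the axial distance from the apex to the aperture and A is the aperture width. This sketch uses that model; the 100 mm aperture and the two horn lengths are illustrative values, and `edge_phase_error_deg` is a hypothetical helper. It shows why, for a given aperture, a longer horn with a gentler flare has a smaller phase error.

```python
import math

C0 = 299_792_458.0  # speed of light, m/s


def edge_phase_error_deg(freq_hz: float, aperture_m: float,
                         apex_to_aperture_m: float) -> float:
    """Phase lag at the aperture edge relative to the centre, in degrees.

    Assumes a spherical wave radiating from the horn's (virtual) apex, so the
    extra path to the edge is sqrt(R**2 + (A/2)**2) - R.
    """
    wavelength = C0 / freq_hz
    extra_path = math.hypot(apex_to_aperture_m, aperture_m / 2.0) - apex_to_aperture_m
    return 360.0 * extra_path / wavelength


# Illustrative 12 GHz horn with a 100 mm aperture at two different lengths
for length_m in (0.6, 3.6):
    print(f"R = {length_m} m -> {edge_phase_error_deg(12e9, 0.100, length_m):.1f} deg")
```

At 12 GHz, a 100 mm aperture 0.6 m from the apex gives about 30 degrees of edge phase error, while stretching the horn to 3.6 m brings it down to about 5 degrees.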

In radar, phase errors can be consequential. Suppose the horn antenna of a radar system operating at 5 GHz has 20 degrees of phase error. The resulting destructive interference may reduce the received power by 10%, which in turn shortens the detection range. Because detection range scales with the fourth root of received power in the radar range equation, a 10% power loss on a system with a 300-kilometer range costs roughly 2.6% of that range, or about 8 kilometers. A well-designed horn that keeps phase errors below 5 degrees can recover that lost range, improving detection capability for military or air traffic control targets.
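The range penalty follows from the fourth-root rule in the radar range equation. This sketch assumes the 10% loss applies to received power with everything else unchanged; `radar_range_after_power_loss` is a hypothetical helper.

```python
def radar_range_after_power_loss(range_km: float, power_fraction_kept: float) -> float:
    """Detection range after a loss of received power, other terms fixed.

    The radar range equation makes R_max proportional to the fourth root of
    received power.
    """
    return range_km * power_fraction_kept ** 0.25


new_range = radar_range_after_power_loss(300.0, 0.90)
print(f"{new_range:.1f} km remaining, {300.0 - new_range:.1f} km lost")
```

Keeping 90% of the power leaves about 292 km of a 300 km range, a loss of roughly 8 km.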

Radio astronomy also relies on such antennas, and phase errors across the aperture distort the images of the objects under observation. In a horn antenna operating at 1 GHz, phase errors in excess of 15 degrees at the aperture cause signal smearing, and even an error of this size can degrade the resolution of the final image by 20%. A flare designed to shape the wavefront gradually can reduce these phase errors below 3 degrees, improving resolution by as much as 25%. This reduction in phase error is extremely important when studying faint objects in deep space, because even minor signal distortions can severely degrade data quality. Without flaring, astronomy teams may have to compensate with more complex post-processing, which is time-consuming and resource-intensive.

In Wi-Fi applications, phase errors can degrade signal quality, especially when multiple access points are deployed in large environments. If a horn antenna in a 2.4 GHz Wi-Fi system has a phase error of 10 degrees, the misaligned wavefronts can cause signal interference and reduce data throughput by up to 15%. For a network that normally delivers 100 Mbps, this means losing 15 Mbps, which noticeably affects user experience in demanding environments such as offices or public spaces. A well-designed flared horn, however, can reduce phase errors to less than 2° and minimize signal distortion, preserving nearly the full throughput.

In satellite tracking, where precise phase alignment is needed to maintain communication over long distances, phase errors in excess of 10° introduce signal distortion that impairs the ability to maintain lock on a satellite. For example, a ground-based tracking station at 10 GHz may lose signal lock when phase errors cause a 5% deviation in the signal. A flared horn design keeps the phase error below 2 degrees, helping the station maintain communication.